The Case Against Artificial Intelligence

A Rant From #StopTheRobots

11 March 2016

[EDIT, Feb. 23rd 2017 - The goal of this computer-generated blog post is not to argue against artificial intelligence. AI has many benefits. However, AI also has problems, and I wanted a blog post to focus on these problems. It is ultimately a call for greater societal regulation of technology, to ensure that AI benefits humanity, not harms it.]

I was ‘inspired’ to write this article because I read the botifesto “How To Think About Bots”. As I thought the ‘botifesto’ was too pro-bot, I wanted to write an article that takes the anti-bot approach. However, halfway through writing this blog post, I realized that the botifesto…wasn’t written by a bot. In fact, most pro-bot articles have been hand-written by human beings. This is not at all a demonstration of the power of AI; after all, humans have written optimistic proclamations about the future since the dawn of time.

If I am to demonstrate that AI is a threat, I have to also demonstrate that AI can be a threat, and to do that, I have to show what machines are currently capable of doing (in the hopes of provoking a hostile reaction).

So this blog post has been generated by a robot. I have provided all the content, but an algorithm (“Prolefeed”) is responsible for arranging the content in a manner that will please the reader. Here is the source code. And as you browse through it, think of what else can be automated away with a little human creativity. And think whether said automation would be a good thing.

Unemployment

In 2013, Oxford Professors Frey and Osborne argued that robots will replace 70 million jobs in the next 20 years (or 47% of all jobs in the USA). J.P. Gownder, an analyst at the Boston-based tech research firm “Forrester”, made a more optimistic case for technology in 2015, claiming that by the year 2025, robots will only cause a net job loss of 9.1 million. (Both studies were reported in Wired.)

J.P. Gownder attributed his lower estimate of job loss to the new jobs that technology will create. In a Forbes article, he justified his viewpoint:

We forecast that 16% of jobs will disspear[sic] due to automation technologies between now and 2025, but that jobs equivalent to 9% of today’s jobs will be created. Physical robots require repair and maintenance professionals — one of several job categories that will grow up around a more automated world. That’s a net loss of 7%: far fewer than most forecasts, though still a significant job loss number.

The same ‘adjustment’ for job gains was also made in a 2016 report by the World Economic Forum at Davos. “[D]isruptive labour market changes” (which include not only AI, but other emerging tech such as 3D printing) could destroy 7.1 million jobs by 2020, while also creating 2 million jobs in smaller industry sectors. This means a net total of 5.1 million jobs lost.

| Study | Net Job Loss per Year |
|---|---|
| Frey and Osborne (2013) | 3.50 million |
| J.P. Gownder (2015) | 0.91 million |
| World Economic Forum (2016) | 1.02 million |
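The per-year figures in the table can be reproduced by dividing each study's net job loss by its forecast horizon. Here is a minimal sketch of that arithmetic. The 20-year and 10-year horizons come from the studies as quoted above; the 5-year horizon for the World Economic Forum report is my assumption, inferred from the table's own figure. The names `FORECASTS`, `net_loss_per_year` and `RATES` are made up for illustration.

```python
# Net job loss per year = total net loss / forecast horizon.
# Each entry: (net jobs lost, in millions; horizon, in years).
# The 5-year horizon for the WEF report is an assumption inferred
# from the table (5.1 million / 1.02 million per year).
FORECASTS = {
    "Frey and Osborne (2013)": (70.0, 20),
    "J.P. Gownder (2015)": (9.1, 10),
    "World Economic Forum (2016)": (5.1, 5),
}


def net_loss_per_year(total_millions: float, years: int) -> float:
    """Spread a total net job loss evenly over the forecast horizon."""
    return round(total_millions / years, 2)


RATES = {
    study: net_loss_per_year(total, years)
    for study, (total, years) in FORECASTS.items()
}

for study, rate in RATES.items():
    print(f"{study}: {rate:.2f} million jobs/year")
```

Spreading the losses evenly over the horizon is a simplification, of course. None of these studies claims that the jobs will disappear at a constant rate.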

There are some people who claim that we should not worry about robots because we’ll just create brand new jobs out of thin air. To me, they are behaving like a compulsive gambler boasting about how he can earn $2.82 by simply gambling away $10.
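The $2.82 in the gambler analogy appears to match the World Economic Forum numbers: 2 million jobs created for every 7.1 million destroyed, scaled to a $10 stake. A quick sketch of that calculation follows. The function name `payout_per_stake` is hypothetical, and tying the figure to the WEF report is my reading of the numbers, not something stated outright above.

```python
def payout_per_stake(jobs_created_m: float, jobs_destroyed_m: float,
                     stake: float = 10.0) -> float:
    """Scale the ratio of jobs created to jobs destroyed to a dollar stake."""
    return round(stake * jobs_created_m / jobs_destroyed_m, 2)


# WEF figures quoted above: 2 million created, 7.1 million destroyed.
print(payout_per_stake(2.0, 7.1))
```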

In the long-term, perhaps, technology may finally erase the deficit in jobs and be seen as a net job producer. But that is exactly why we need to worry about this “transition period” to this ‘long-term’, whenever that may arrive.

How will this new joblessness come into being? Personally, I do not foresee a bunch of people getting laid off immediately. Instead, companies will gradually reduce their hiring. Why hire a new [PROFESSION_NAME_HERE] when you can just get a robot to do the job for you? Existing employees may be deemed ‘obsolete’, but will be retrained with new skills that cannot be automated (yet).

However, an academic paper entitled “The Labor Market Consequences of Electricity Adoption: Concrete Evidence From The Great Depression”, by Miguel Morin, does suggest that technological unemployment will indeed take the form of layoffs. During the Great Depression, the cost of electricity decreased for concrete plants. This increased the productivity of workers. Instead of increasing the production of concrete though, the concrete plants simply fired workers, thereby cutting their costs. The Atlantic also described several examples where technological unemployment occurred during times of recession…when companies need to save money, humans get laid off and the cheaper bots come in instead.

I hope I do not need to write out why unemployment is not good.

The “AI Winter”

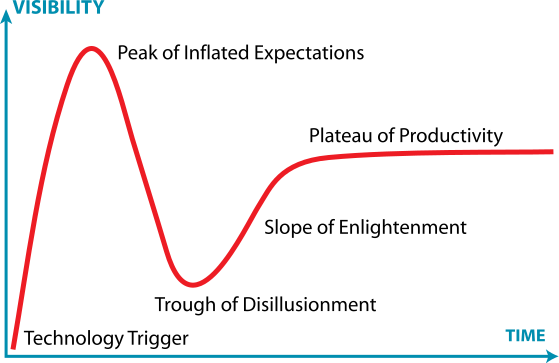

Artificial Intelligence is, ultimately, just a technology. And technologies can often go through a ‘Hype Cycle’, as coined by the research firm Gartner.

At first, people become very interested in a brand new technology (a “Technology Trigger”). Companies start to over-invest in researching this technology, leading to a “Peak of Inflated Expectations” (i.e., a bubble). But the technology turns out to have major limitations. As a result, investment in the technology dries up (“Trough of Disillusionment”). Most companies either begin laying people off or close down outright.

Eventually, the survivors realize how to use the technology properly (“Slope of Enlightenment”), and we can finally use the technology in our day-to-day life (“Plateau of Productivity”). But as this picture from Wikipedia shows, the visibility of the technology in the Plateau of Productivity is much less than the visibility of that same technology in the Peak of Inflated Expectations. The brand new technology has done great things for us. It’s just not as great as we hoped it would be. And does it justify the extreme waste seen in the “Peak of Inflated Expectations”?

If this were just some hypothetical graph, then it would not be much to worry about. But I have already lived through two tech bubbles: the dot-com bubble of 1997-2000 and the current unicorn bubble (ending this year, in 2016). A cycle of “irrational exuberance” (“Uber for [Plural Nouns]”) followed by layoffs can never be a good thing. Especially if you have to live with the consequences. Any actual benefit caused by this overinvestment is only incidental.

I’m afraid that this hype cycle can only get worse. The major reason the American Real Estate Bubble of 2005-2007 and the current unicorn bubble have grown as big as they did is a policy of ‘low interest rates’ pursued by central banks. Investors are ‘encouraged’ not to save money but instead to invest in risky ventures. The current interest in AI suggests that investors may view this new technology as yet another chance to make money. These investors will probably pour way too much money into AI research (if they haven’t started doing so already). And almost all of it will be exposed as wasteful in the “Trough of Disillusionment” stage.

So why do I call the “Trough of Disillusionment” an “AI Winter”? Because I didn’t come up with this name. It was invented in 1984. According to Wikipedia, the AI field has gone through two major AI winters (1974-1980, 1987-1993) and several “smaller episodes” as well. Obviously our technology has improved. But human nature has not changed. If an AI bubble inflates, run.

Technological Dependence

Some people believe that AI could serve as a tool that can perform routine, autonomous tasks. This frees up humans to handle more creative tasks. Darrel West, the director for technology innovation at the Brookings Institution, embodies this sentiment of techno-optimism by saying:

“It’s good that we’re figuring out how to use robots to make our lives easier. There are tasks they can do very well and that free humans for more creative enterprises.”

For example, robots are very good at writing 9-page textbooks.

Now, I understand that some textbooks can be dry and boring. But it is hard to say that they are not “creative enterprises”. Yet, if you click on my above link, you will actually see Darrel West’s quote, down to his very last words: “more creative enterprises”. Some human journalist told Darrel that a university is building a program that can write textbooks, and Darrel’s only response boils down to: “Oh, that’s cool. More time for creativity then.” (He also points to the Associated Press’ own use of bots to write stories about sports to justify his viewpoint.)

Does Darrel consider the act of writing not creative then?

Here’s a dystopian idea. The term “creative enterprise” is a euphemism for “any activity that cannot be routinely automated away yet”. Any task that we declare ‘a creative expression of the human experience’ will be seen as ‘dull busywork’ as soon as we invent a bot that can do it. We delude ourselves into thinking we are still the superior race, while slowly replacing all our usual activities with a bunch of silicon-based tools.

This might be tolerable if your tools worked 100% of the time. But abstractions are leaky. If you rely on your tools too much, you open yourself up to terrible consequences if you ever lose access to the tools or your tools wind up malfunctioning. And the worst part is that your tools may fail at the very moment your (human) skills have decayed. After all, you didn’t need to learn “busywork”. You focused all your efforts on mastering “more creative enterprises”.

This skill decay has already happened to pilots. Thanks to the glory of automation on airplanes, the US Department of Transportation believes that many pilots are unable to fly airplanes in times of crisis. When autopilot works, all is well. When autopilot fails, there’s a real chance that the less capable human pilots will make mistakes that wind up crashing the airplane.

Far from AI freeing us to pursue worthier endeavours, it can only make us more dependent on technology and more vulnerable to disasters when that technology breaks. The only good news is that we can reduce our dependency. For example, the US Department of Transportation recommends that pilots should periodically fly their airplanes manually to keep their own skills fresh.

Angst

It is not enough to build robots to handle the tedious tasks of interviewing human beings and hiring them to do routine tasks, but instead, “Algorithms Make Better Hiring Decisions Than Humans”. It is not enough to have a robot be able to cheerfully play board games and find creative strategies, but instead “Google’s AlphaGo AI beats Lee Se-dol again to win Go series, 4-1”. It is not enough to give video game AI the ability to simulate emotional decision-making by keeping track of a bunch of variables and behaving differently based on those variables, but instead “Researchers Make Super Mario Self-Aware”.

Now, some people may argue that these algorithms are not examples of “intelligence”. The obvious conclusion must be that hiring people, beating people at Go, and playing Super Mario must also not be tasks that require intelligence.

One of the problems with dealing with AI is the inherent vagueness of the terms used to distinguish “us” (the humans) from “them” (the robots). This leads to long and tedious arguments over whether a specific algorithm is an example of “true AI”, without anyone ever providing a decent definition of what “true AI” is. What makes matters worse is the AI Effect, where the goal posts constantly shift in response to new advances in technology. If you design a test to determine what is “true AI”, and then a machine passes the test, a new test will just get created instead.

Apparently, you see, when they said “a machine will never be able to spot-weld a car together”, they meant to say “a machine will never be aware that it’s welding a car together”.

Some of the goal post shifting is justified: if we build something, we know how it works, and if we know how it works, we can see that it is artificial. And yet, again, at some point, the goal post shifting starts to look utterly ridiculous. Please don’t say the act of writing novels is not a sign of intelligence just because NaNoGenMo exists. (In fact, it is very possible that as AI improves, we may be forced to confront the possibility that intelligence itself may not exist, which seems like a far worse fate than merely accepting the existence of AI.)

One way around this problem is to essentially refuse to mention the term AI at all, and instead use a more neutral term, such as machine learning or expert systems. “Yeah that robot may not meet my arbitrary definition of intelligence, but it is an expert in one specific domain area, and so I’ll defer to its expertise.” Yet the term of AI still continues to capture our imagination.

Why?

I think that our own ego is deeply invested in the idea that we are ‘special’, and that we are concerned when that ‘specialness’ gets challenged. Michael Kearns, an AI researcher at the University of Pennsylvania, claimed that “[p]eople subconsciously are trying to preserve for themselves some special role in the universe”, in an article about an AI being built to conduct scientific experiments.

Now Kearns doesn’t care about protecting the human ego. But I do. I don’t know what would happen to humanity when its self-image crumbles in the face of advanced machine capabilities. But I don’t think it’s something to look forward to.

Botcrime

Consider the following quotes from the botifesto “How To Think About Bots”:

The work and the legal response raise crucial questions. Who is responsible for the output and actions of bots, both ethically and legally? How does semi-autonomy create ethical constraints that limit the maker of a bot?

If you make a bot, are you prepared to deal with the fallout when your tool does something that you yourself would not choose to do? How do you stem the spread of misinformation published by a bot? Automation and “big” data certainly afford innovative reporting techniques, but they also highlight a need for revamped journalistic ethics.

Bots might be effective tools for guiding people toward healthier lifestyles or for spreading information about natural disasters. How can policies allow for civically “good” bots while stopping those that are repressive or manipulative?

And so on and so forth. There are obviously legitimate fears about bots doing evil, either due to their interactions with the outside world or because they have been programmed to do evil by another entity.

Raising questions about bot regulation is troubling though, because it implies that these questions must be answered. They do not have to be answered now, of course. But they do have to be answered fairly soon.

Not only must they be answered, in the form of new government regulations, but those regulations must also be enforced. A law that is not enforced might as well not exist at all. Considering how little success we currently have in stopping preexisting spambots and social media manipulators, my hopes for effective enforcement of regulations are fairly low.

What is worse is the fact that people will stand in the way of regulations. The authors (all creators of bots) strongly support regulation…except when said regulation might be used against them.

Rumination on bots should also work to avoid policies or perspectives that simply blacklist all bots. These automatons can and might be used for many positive efforts, from serving as a social scaffolding to pushing the bounds of art. We hope to provoke conversation about the design, implementation and regulation of bots in order to preserve these, and other as yet unimagined, possibilities.

Again, a general blacklist of bots is a perfectly horrible idea (mostly because we cannot enforce it). But attempting to sort out ‘good’ bots from ‘bad’ bots seems like a rather dangerous and futile task. I can easily see the emergence of a “pro-bot” lobby that will stand against any but the most token of regulations, using doublespeak to claim that any use of technology has “positive effects”, while excusing away any problems the bots may cause.

Alternatively, we can also see bot developers pitted against each other, decrying other people’s uses of bots as being “negative” while championing their own use of bots as being “positive”. We need to have a legal system that can help evaluate whether bots are good or bad.

According to Ryan Calo though, the American legal system’s views about robots are outdated and ill-suited to our brave new world. Judges generally see robots as “programmable machine[s], by definition incapable of spontaneity”, and ignore possible ‘emergent properties’ that can exist when robots interact with society as a whole.

Ryan Calo supports the creation of “a new technology commission, a kind of NASA-for-everything that can act as a repository of knowledge about robots to guide legal actors, including courts”…but this commission seems very similar to an interest group, and one that may only have the interests of robot developers at heart, not those of society.

It will take time to come up with the right balance between bot freedom and bot banning, if there really is any.

Conclusion

In 2016, Evans Data conducted a survey of 500 software engineers to find out what they feared the most. The largest plurality (29.1%) said that they feared AI taking their jobs. What’s worse is that over 60% of software engineers thought that AI “would be a disaster”.

This does not mean that these software engineers are Luddites. “[O]ver three-quarters of the developers thought that robots and artificial intelligence would be a great benefit to mankind”, claimed Janel Garvin (the CEO of Evans Data). “Overlap between two groups was clear which shows the ambivalence that developers feel about the dawn of intelligent machines. There will be wonderful benefits, but there will also be some cataclysmic changes culturally and economically.”

There has been a lot of coverage about the rise of AI and its ‘wonderful benefits’. The goal of this post is to illustrate the ‘cataclysmic changes’ and thereby make an implicit argument against AI.

The dangers of AI are great, and we should not let the potential of AI blind us to real risks. There are some solutions to help manage AI risk that I proposed in the past, but probably the most practical and sensible solution at the moment is to slow down AI development and think through these risks carefully. By slowing down progress, we can ensure that the changes won’t be so “cataclysmic”, and that humanity can survive intact.